Nicolas Ferré

Data Scientist Expert

Smart parking management with AWS IoT

Monitoring a parking lot can be accomplished using various methods. While dedicated installations and sensors are one option, modern approaches can leverage machine learning, cloud and IoT technologies to implement more flexible and cost-effective solutions.

This blog post explains how we at Arhs Spikeseed implemented a parking management solution using these technologies, and the architectural choices we made to meet the requirements.

Context

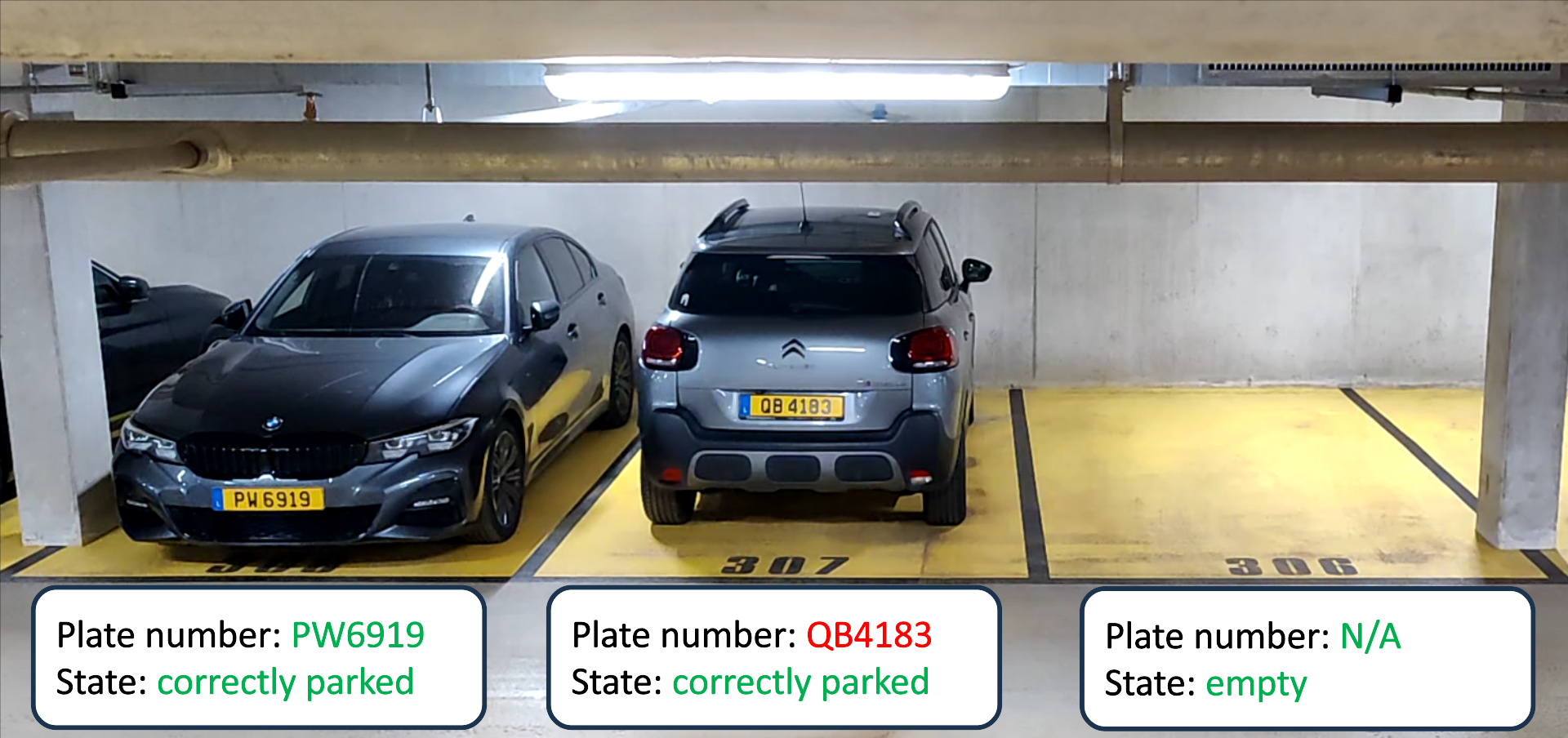

The goal of the automated system we have built is to manage and monitor the state of multiple parking lots across several countries. More specifically, the solution provides:

- A near real-time view of all parking spots.

- The state of each spot (e.g., if a vehicle is parked, its plate number, …).

- The vehicles that are not authorised to park.

- The vehicles that are incorrectly parked (e.g., a vehicle parked across two spots).

This information is extracted on the parking lot side and made available in an administration panel so that operators can take action when necessary.

Global workflow

Now let’s look at the solution we implemented to meet these needs.

Chosen approach

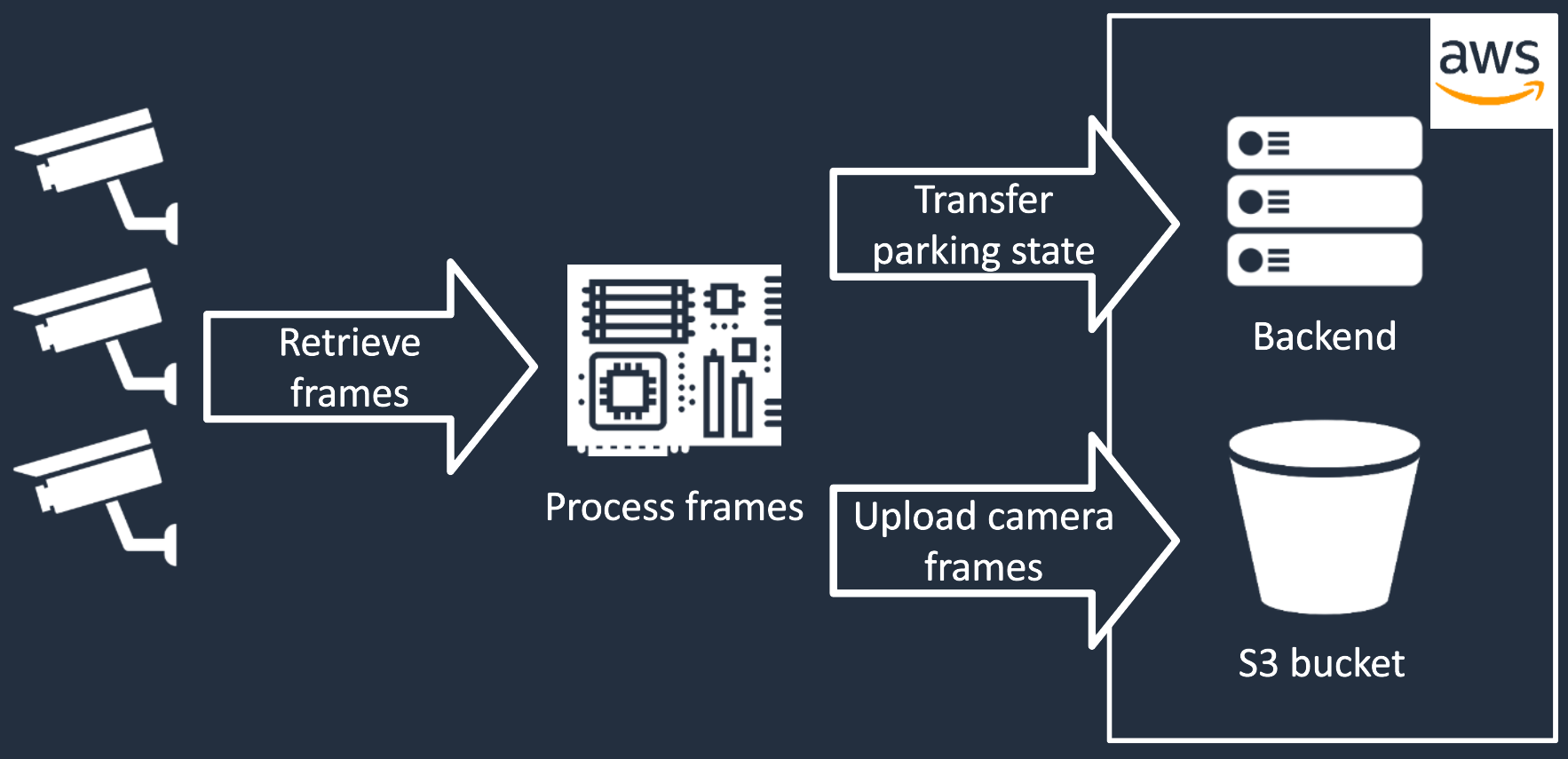

To monitor the parking spots, we chose the following approach:

- A set of cameras is installed at each parking lot, and each camera tracks several spots.

- An edge device at each parking lot retrieves frames from each camera at regular intervals, and computes the state of each spot using machine learning models.

- The spot states are then sent to the backend deployed in an AWS environment.

- Camera frames are also uploaded to an S3 bucket at regular intervals, so that the parking lot can be viewed from the administration panel.

Data transfer

Two main strategies can be applied to analyze the camera stream: processing frames in the cloud, or locally at the parking site.

AWS provides compute and video transfer services such as Amazon Kinesis Video Streams, so one option would be to send the video streams to the cloud and process them on EC2 instances. However, we chose to process the frames on the parking lot side rather than sending them to the cloud, for the following reasons:

- Wired and Wi-Fi connections are generally not available in parking lots, so the video streams would have to be sent to the cloud over a 4G connection. To maintain high detection quality with the machine learning models we use, a high frame resolution is necessary. However, several issues can arise with a 4G connection:

  - High-resolution frames produce large data volumes, which can make the 4G subscription costly.

  - Data loss on 4G connections can degrade the quality and frequency of received images.

  - Latency can occur, especially if the network quality is poor.

- Sending all frames to the cloud can also raise privacy and security concerns. Processing on the parking lot side ensures that only the necessary information is sent to the cloud, and frames can be preprocessed (e.g., by blurring certain parts) before being sent.
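To make the cost argument above more concrete, the following back-of-the-envelope sketch compares the monthly data volume per camera for both strategies. All figures are hypothetical assumptions chosen for illustration, not measurements from our deployment: a 4 Mbit/s high-resolution stream for cloud processing, versus one 300 KB snapshot plus a 1 KB JSON state per minute for edge processing.

```python
# Rough monthly 4G data volume per camera for the two strategies.
# Every number used below is a hypothetical assumption, not a measurement.
SECONDS_PER_MONTH = 30 * 24 * 3600  # 30-day month


def monthly_gb(bytes_per_second: float) -> float:
    """Convert a sustained data rate into decimal gigabytes per 30-day month."""
    return bytes_per_second * SECONDS_PER_MONTH / 1e9


def cloud_processing_rate(stream_bitrate_bps: float) -> float:
    """Strategy 1: stream the video continuously to the cloud."""
    return stream_bitrate_bps / 8  # bits per second -> bytes per second


def edge_processing_rate(snapshot_bytes: int, state_bytes: int, period_s: int = 60) -> float:
    """Strategy 2: process at the edge, upload one snapshot and one state per period."""
    return (snapshot_bytes + state_bytes) / period_s


if __name__ == "__main__":
    cloud = monthly_gb(cloud_processing_rate(4_000_000))     # assumed 4 Mbit/s stream
    edge = monthly_gb(edge_processing_rate(300_000, 1_000))  # assumed 300 KB + 1 KB per minute
    print(f"cloud: {cloud:.0f} GB/month, edge: {edge:.1f} GB/month")
```

Under these assumptions, streaming uses roughly 1,296 GB per camera per month versus about 13 GB for edge processing, a difference of about two orders of magnitude. The real ratio depends on the actual bitrate, snapshot size and upload frequency.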

Detection process

Now that we have an overview of the system, let’s focus on the detection process that extracts relevant information from camera frames.

End-to-end strategy

The following diagram shows how the detection process is performed.

Every 2 seconds, the edge device retrieves a frame from each camera stream and applies vehicle detection and plate recognition. The results are used to build the state of each spot, which is kept in memory. Here is an example of the state of a specific spot:

```json
{
  "id": "SPOT1",
  "plate_number": "ABC1234",
  "car_state": "CORRECTLY_PARKED"
}
```

Information such as the state of the spot and the detected plate number (if a vehicle is found) is saved. The following states can be detected:

- A vehicle is correctly parked in the spot.

- A vehicle is incorrectly parked in the spot, extending beyond the spot's boundaries.

- A vehicle is detected in front of the spot, such as when a vehicle drives through the parking lot without parking.

- No vehicle is detected in this spot.
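The per-spot state and the four cases listed above can be modeled with a small data structure. This is a minimal sketch: only `CORRECTLY_PARKED` appears in the example above, so the other enum names (`INCORRECTLY_PARKED`, `IN_FRONT`, `EMPTY`) and the `SpotState` class are hypothetical.

```python
import json
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class CarState(str, Enum):
    # Only CORRECTLY_PARKED comes from the example state; the other names are hypothetical.
    CORRECTLY_PARKED = "CORRECTLY_PARKED"
    INCORRECTLY_PARKED = "INCORRECTLY_PARKED"  # vehicle extends beyond the spot
    IN_FRONT = "IN_FRONT"                      # vehicle passing in front of the spot
    EMPTY = "EMPTY"                            # no vehicle detected


@dataclass
class SpotState:
    spot_id: str
    plate_number: Optional[str]  # None when no plate could be read
    car_state: CarState

    def to_json(self) -> str:
        """Serialize to the same shape as the example state above."""
        return json.dumps({
            "id": self.spot_id,
            "plate_number": self.plate_number,
            "car_state": self.car_state.value,
        })
```

For instance, `SpotState("SPOT1", "ABC1234", CarState.CORRECTLY_PARKED).to_json()` yields a JSON document with the same fields and values as the example state.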

Then, every minute, all the collected states are consolidated into a single state. This consolidation smooths the detection results and increases the confidence of the constructed state. The model that recognizes plate numbers may occasionally produce errors on a few frames (e.g., recognizing “ABC1234” as “ABO1234” due to brightness variations). The consolidation process takes all plate numbers detected for the spot during the last minute and keeps the most frequent one.
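The consolidation step described above is essentially a majority vote over one minute of observations. The sketch below assumes each observation is a dict shaped like the example state; applying the same majority vote to `car_state` is our assumption, since the text only describes it for plate numbers.

```python
from collections import Counter
from typing import Iterable, List, Optional


def most_frequent(values: Iterable[Optional[str]]) -> Optional[str]:
    """Return the most frequently observed non-empty value, or None if there is none."""
    counts = Counter(v for v in values if v)
    if not counts:
        return None
    # most_common(1) returns [(value, count)]; ties keep first-seen order.
    return counts.most_common(1)[0][0]


def consolidate(observations: List[dict]) -> dict:
    """Merge one minute of per-frame observations of a single spot into one state."""
    if not observations:
        raise ValueError("no observations to consolidate")
    return {
        "id": observations[0]["id"],
        # Occasional OCR errors such as "ABO1234" are outvoted by the correct reading.
        "plate_number": most_frequent(o.get("plate_number") for o in observations),
        # Assumption: the spot state is also chosen by majority vote.
        "car_state": most_frequent(o["car_state"] for o in observations),
    }
```

With 30 frames per minute, a plate misread on a handful of frames is simply outvoted by the correct reading.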

The consolidated state is then sent to the backend, along with the latest frames from each camera.

Frame processing

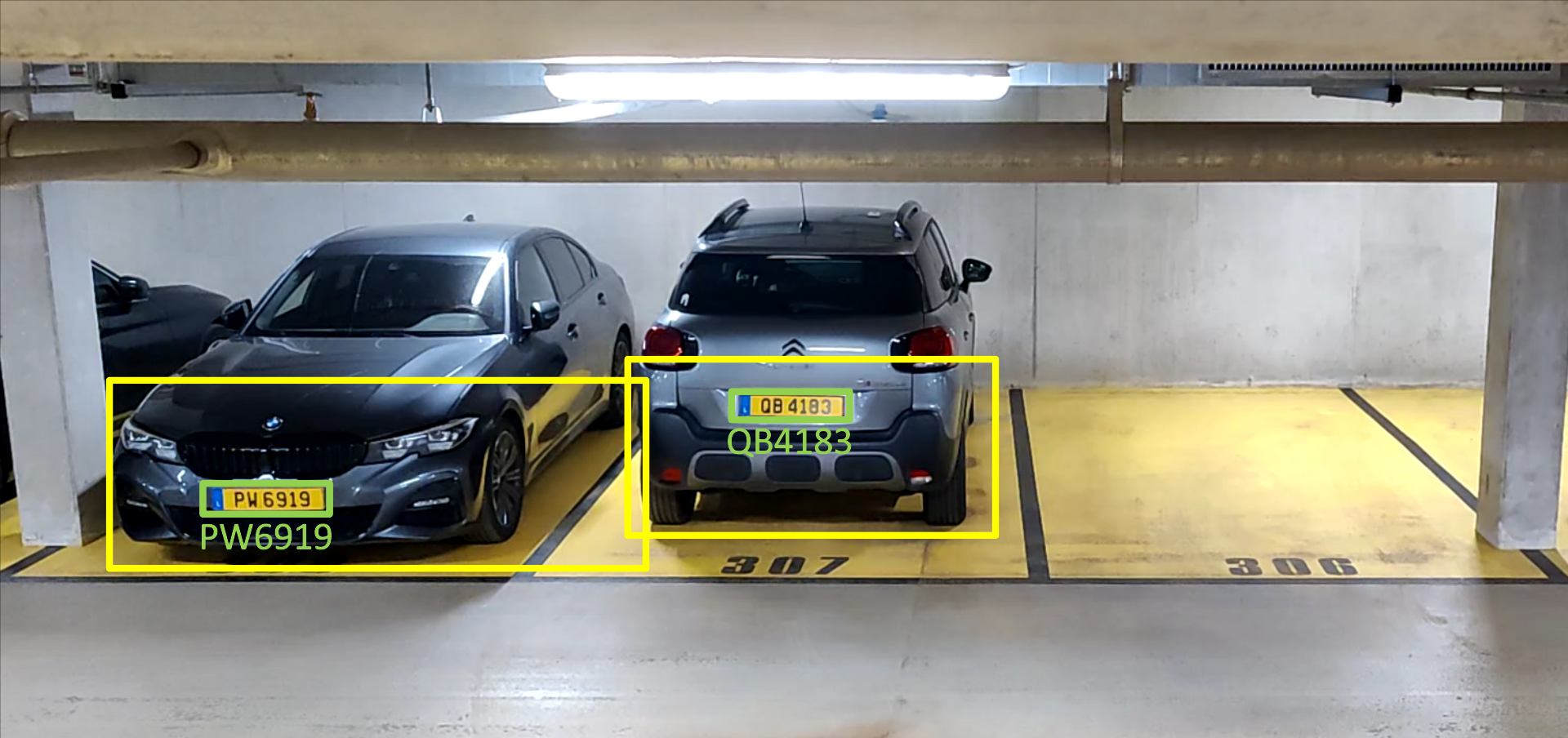

When a frame is retrieved from a camera, the first step is vehicle detection. This is performed using an object detection model called YOLOv7 tiny, which returns the bounding box coordinates of each detected vehicle in the frame (in yellow in the following image).
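Before plate recognition, the raw detector output has to be reduced to vehicle boxes. The sketch below assumes the YOLOv7-tiny output has already been decoded (with non-maximum suppression applied) into `(label, confidence, box)` tuples; the `Detection` type, the label set and the 0.5 confidence threshold are hypothetical choices, based on the COCO class names that YOLOv7 models are commonly trained on.

```python
from typing import List, NamedTuple, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels


class Detection(NamedTuple):
    label: str
    confidence: float
    box: Box


# COCO class names treated as vehicles (hypothetical selection).
VEHICLE_LABELS = {"car", "truck", "bus", "motorcycle"}


def vehicle_boxes(detections: List[Detection], min_confidence: float = 0.5) -> List[Box]:
    """Keep only confident vehicle detections and return their bounding boxes."""
    return [
        d.box
        for d in detections
        if d.label in VEHICLE_LABELS and d.confidence >= min_confidence
    ]
```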

Next, plate detection is applied. The text recognition model PaddleOCR is run on each zone delimited by a vehicle bounding box. This model detects the bounding boxes of the texts (green boxes in the image below) and recognizes the characters. To increase recognition quality, the zone on which the model runs should be kept as small as possible. Since a license plate is not expected on the top part of a vehicle, we restricted the recognition zone to the bottom part only (yellow boxes in the image below).
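Restricting the recognition zone amounts to simple box arithmetic. In this minimal sketch, the `bottom_fraction` of 0.5 and the helper names are hypothetical; the post does not state how much of the vehicle box is kept. Image coordinates grow downwards, so the bottom part is the range closest to `y2`.

```python
from typing import Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2); y grows downwards in image coordinates


def clip_box(box: Box, width: int, height: int) -> Box:
    """Clamp a detector box to the frame, since boxes can slightly exceed the image."""
    x1, y1, x2, y2 = box
    return (max(0, x1), max(0, y1), min(width, x2), min(height, y2))


def plate_search_zone(vehicle: Box, bottom_fraction: float = 0.5) -> Box:
    """Keep only the lower part of a vehicle box, where license plates are expected."""
    if not 0 < bottom_fraction <= 1:
        raise ValueError("bottom_fraction must be in (0, 1]")
    x1, y1, x2, y2 = vehicle
    top = y2 - int(round((y2 - y1) * bottom_fraction))
    return (x1, top, x2, y2)
```

With an OpenCV frame (a NumPy array), the resulting zone can then be cropped with `frame[y1:y2, x1:x2]` before being passed to the OCR model.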

With the coordinates of each vehicle and license plate, we can now calculate the state of the spots. Since the camera position and orientation are fixed, the coordinates of all spots are encoded in the device configuration (in red in the image below). Using boolean logic, we compare the coordinates of the vehicles and plates with those of the parking spots to determine which spots the vehicles are parked in and whether they are correctly parked.
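One way to implement this comparison is to measure how much of each vehicle box falls inside each spot. The sketch below is hypothetical in several ways: it assumes spots are stored as axis-aligned rectangles in pixel coordinates (real spots seen in perspective are usually quadrilaterals), uses made-up thresholds, and omits the plate-based checks and the "in front of the spot" case.

```python
from typing import Dict, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def area(b: Box) -> float:
    return max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])


def intersection(a: Box, b: Box) -> float:
    """Area of the overlap between two axis-aligned boxes (0 if they are disjoint)."""
    return area((max(a[0], b[0]), max(a[1], b[1]), min(a[2], b[2]), min(a[3], b[3])))


def assign_vehicle(vehicle: Box, spots: Dict[str, Box],
                   touch_ratio: float = 0.2, inside_ratio: float = 0.7) -> Dict[str, str]:
    """Return the state of every spot the vehicle overlaps (hypothetical thresholds)."""
    v_area = area(vehicle)
    if v_area == 0:
        return {}
    # Share of the vehicle box lying inside each spot.
    ratios = {sid: intersection(vehicle, s) / v_area for sid, s in spots.items()}
    touched = [sid for sid, r in ratios.items() if r >= touch_ratio]
    # Mostly inside exactly one spot: correctly parked.
    if len(touched) == 1 and ratios[touched[0]] >= inside_ratio:
        return {touched[0]: "CORRECTLY_PARKED"}
    # Straddling several spots or largely outside the spot: incorrectly parked.
    return {sid: "INCORRECTLY_PARKED" for sid in touched}
```

For two adjacent spots, a vehicle fully inside the first one is reported as correctly parked, while a vehicle split evenly across both marks both spots as incorrectly parked.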

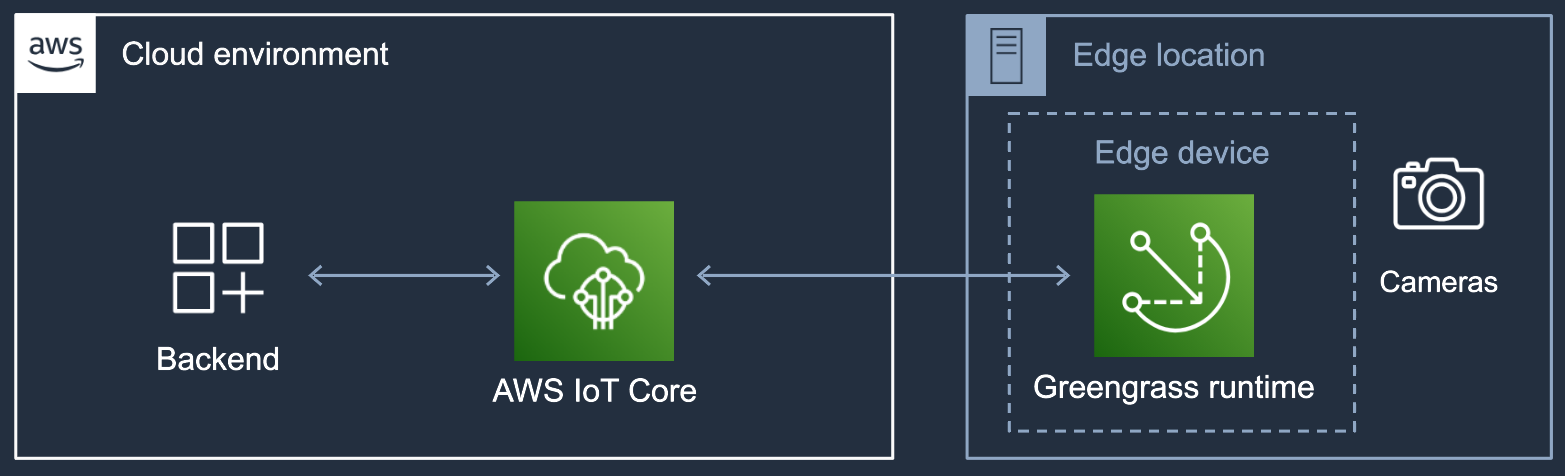

Management of the edge devices

The edge devices are on the local network of the parking lot, which cannot be accessed from outside for security reasons. This means that deploying a new version of the detection process or debugging an edge device requires physically going to the parking lot and accessing the device from a computer connected to the local network.

While this can be done on a small scale and when the operator’s office is close to the parking area, this method is not flexible for handling many devices across multiple countries.

To remotely monitor, debug, and deploy components across the entire device fleet, we implemented an architecture based on AWS IoT. The AWS IoT Greengrass runtime is installed on each edge device and handles communication with AWS. Even though the device is on a private network, Greengrass allows remote management because the runtime always initiates the connections to AWS, never the reverse.

Greengrass regularly synchronizes the list of components running on the device with the deployment configuration defined on the AWS side. Additionally, AWS IoT Core provides a fully managed MQTT message broker for exchanging JSON messages between the device and the AWS environment.

Components deployed on the edge device

Three Greengrass components are deployed on the devices to run and monitor the camera frame processing: the application that performs the detection process, the log manager to send component logs to AWS, and the secure tunneling component to remotely debug the devices.

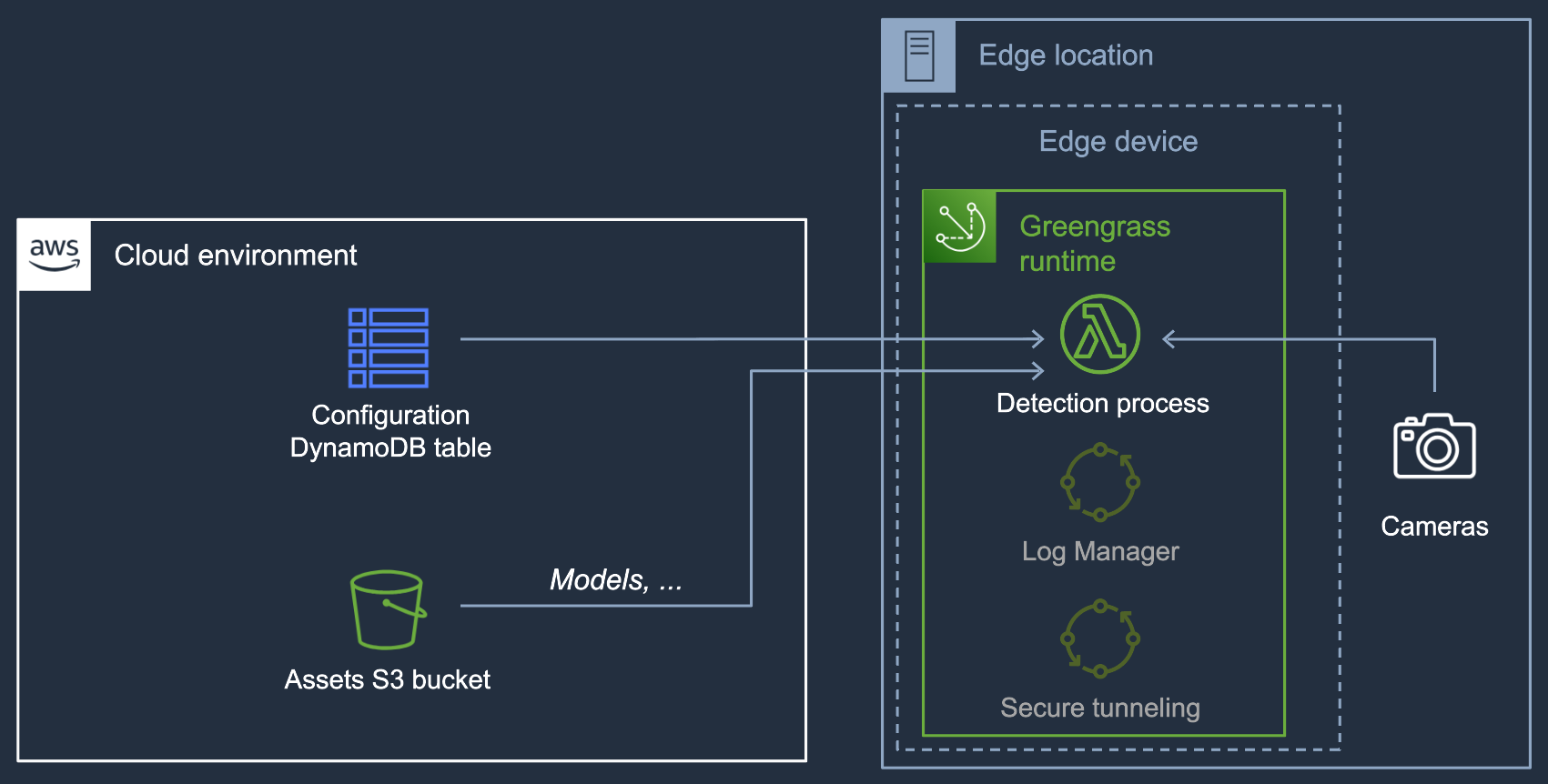

Detection process

The main component is the detection process application that retrieves frames from the cameras, performs the detection using machine learning models, constructs the parking state, and sends it to AWS.

The component is a Lambda function written in Python, created in the AWS environment and deployed to the device as a Greengrass component. Compared with a classic Lambda function running in AWS, a Lambda function wrapped in a Greengrass component and deployed with AWS IoT differs in several ways, including:

- The ability to run indefinitely, without the 15-minute limit.

- No support for Lambda layers, requiring system or Python dependencies to be installed on the device using its Linux shell.

When the component starts on the device, it performs the following actions:

- Retrieves the device configuration from a DynamoDB table, including the list of cameras and model configurations.

- Downloads necessary files from an S3 bucket, including the machine learning models.

- Connects to the RTSP stream of all the cameras using the OpenCV library.
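The three startup steps above can be sketched as follows. The AWS clients are passed in so the logic can be tested without AWS: `table` is expected to be a boto3 DynamoDB `Table` resource (`get_item`), `s3_client` a boto3 S3 client (`download_file`), and `open_stream` would be `cv2.VideoCapture` in production. The table key (`device_id`) and the configuration schema (`models`, `cameras`, `rtsp_url`, ...) are hypothetical.

```python
import os
from typing import Any, Callable, Dict


def load_device_config(table: Any, device_id: str) -> Dict[str, Any]:
    """Read the device configuration from DynamoDB (hypothetical key name)."""
    response = table.get_item(Key={"device_id": device_id})
    if "Item" not in response:
        raise KeyError(f"no configuration for device {device_id}")
    return response["Item"]


def download_models(s3_client: Any, config: Dict[str, Any], dest_dir: str) -> Dict[str, str]:
    """Download every model listed in the config; return model name -> local path."""
    os.makedirs(dest_dir, exist_ok=True)
    paths = {}
    for name, model in config["models"].items():
        local_path = os.path.join(dest_dir, os.path.basename(model["s3_key"]))
        s3_client.download_file(model["s3_bucket"], model["s3_key"], local_path)
        paths[name] = local_path
    return paths


def open_streams(config: Dict[str, Any], open_stream: Callable[[str], Any]) -> Dict[str, Any]:
    """Open one capture per camera; open_stream would be cv2.VideoCapture on the device."""
    return {cam["id"]: open_stream(cam["rtsp_url"]) for cam in config["cameras"]}
```

Injecting the clients also makes it easy to run the same code on a development machine with local fakes.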

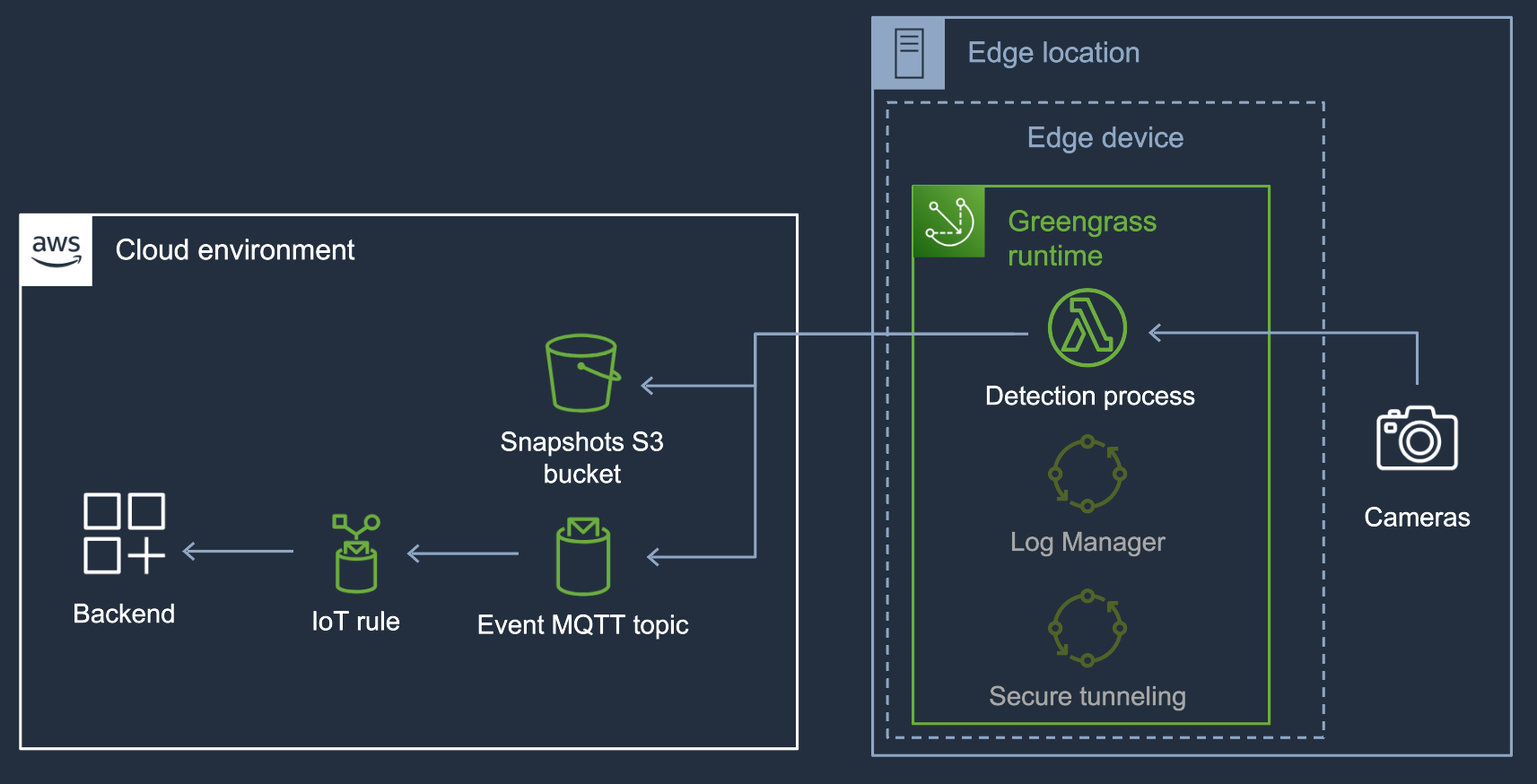

The detection process then starts. Every 2 seconds, it retrieves a frame from each camera, performs machine learning-based detection, constructs the parking state, and sends it every minute to an MQTT topic handled by AWS IoT. This data is then routed to the backend using an IoT rule. The process also sends a snapshot of each camera stream to a dedicated S3 bucket every minute.
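The timing described above (one frame every 2 seconds, consolidation and upload every minute) can be sketched as a simple tick-based loop. All callables are hypothetical hooks: `publish_state` stands for whatever mechanism publishes to the MQTT topic, and `upload_snapshots` for the S3 upload; neither is shown here. The fixed sleep ignores processing time, which a real implementation would subtract.

```python
import time
from typing import Callable, Dict, List

FRAME_PERIOD_S = 2     # one frame per camera every 2 seconds
PUBLISH_PERIOD_S = 60  # consolidate and publish every minute


def run(ticks: int,
        grab_and_detect: Callable[[], Dict[str, dict]],
        consolidate: Callable[[List[Dict[str, dict]]], dict],
        publish_state: Callable[[dict], None],
        upload_snapshots: Callable[[], None],
        sleep: Callable[[float], None] = time.sleep) -> None:
    """Run `ticks` frame cycles, publishing a consolidated state once per minute."""
    frames_per_publish = PUBLISH_PERIOD_S // FRAME_PERIOD_S  # 30 frames per minute
    buffer: List[Dict[str, dict]] = []
    for tick in range(1, ticks + 1):
        buffer.append(grab_and_detect())        # per-spot states for this frame
        if tick % frames_per_publish == 0:
            publish_state(consolidate(buffer))  # e.g. to an AWS IoT MQTT topic
            upload_snapshots()                  # latest frame of each camera to S3
            buffer.clear()
        sleep(FRAME_PERIOD_S)
```

On the device, the loop would run indefinitely, which is possible because a Lambda function deployed with Greengrass is not subject to the 15-minute limit.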

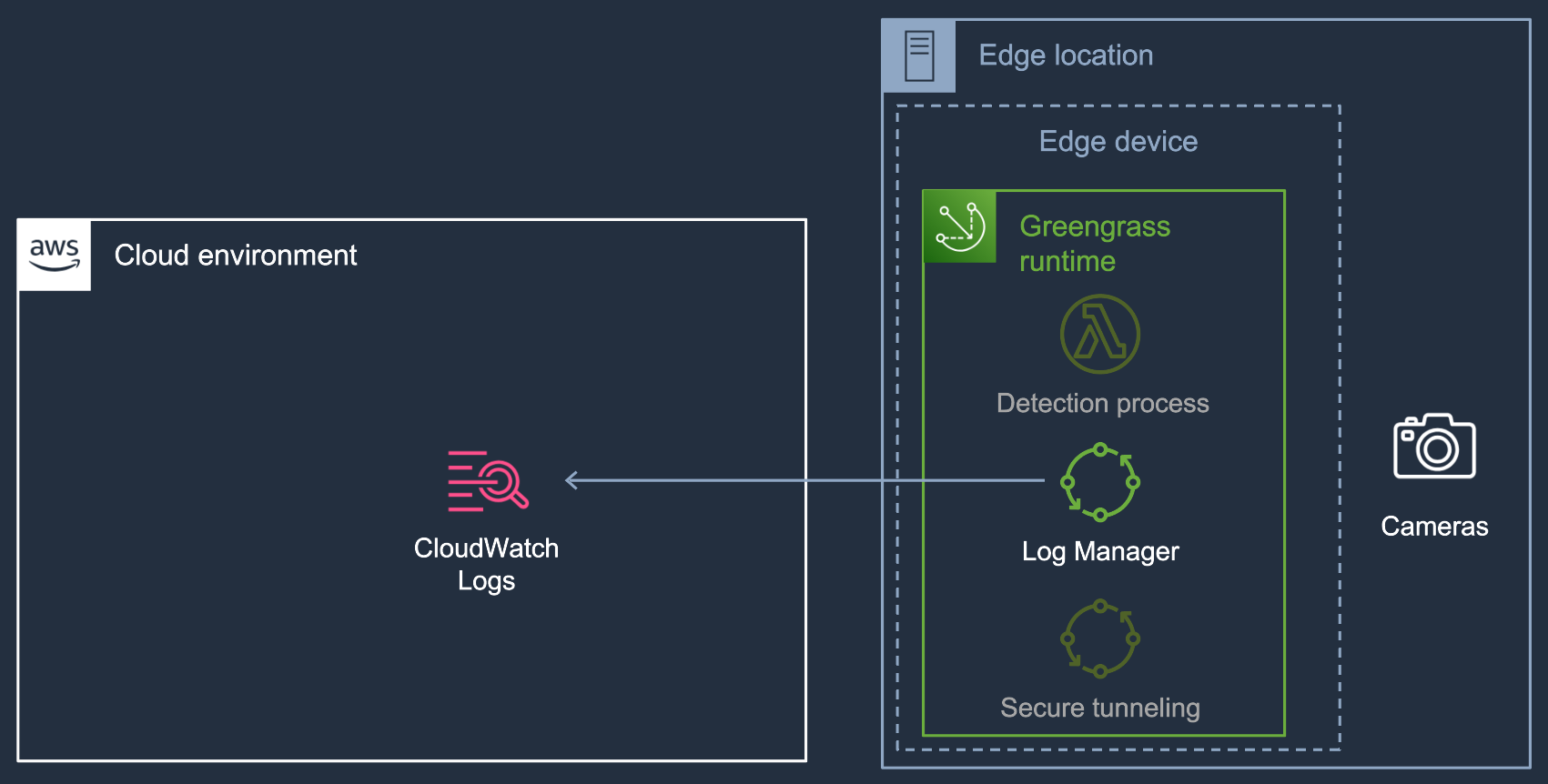

Log Manager

All Greengrass components write their logs in a dedicated folder on the device. By default, you need to connect to the device to access the logs.

To access logs more conveniently, we installed the Log Manager component. This component uploads the logs to Amazon CloudWatch Logs at regular intervals, eliminating the need to connect to the device to read them.

Note that this component is a built-in Greengrass component provided by AWS, not a custom Lambda function like the detection process.

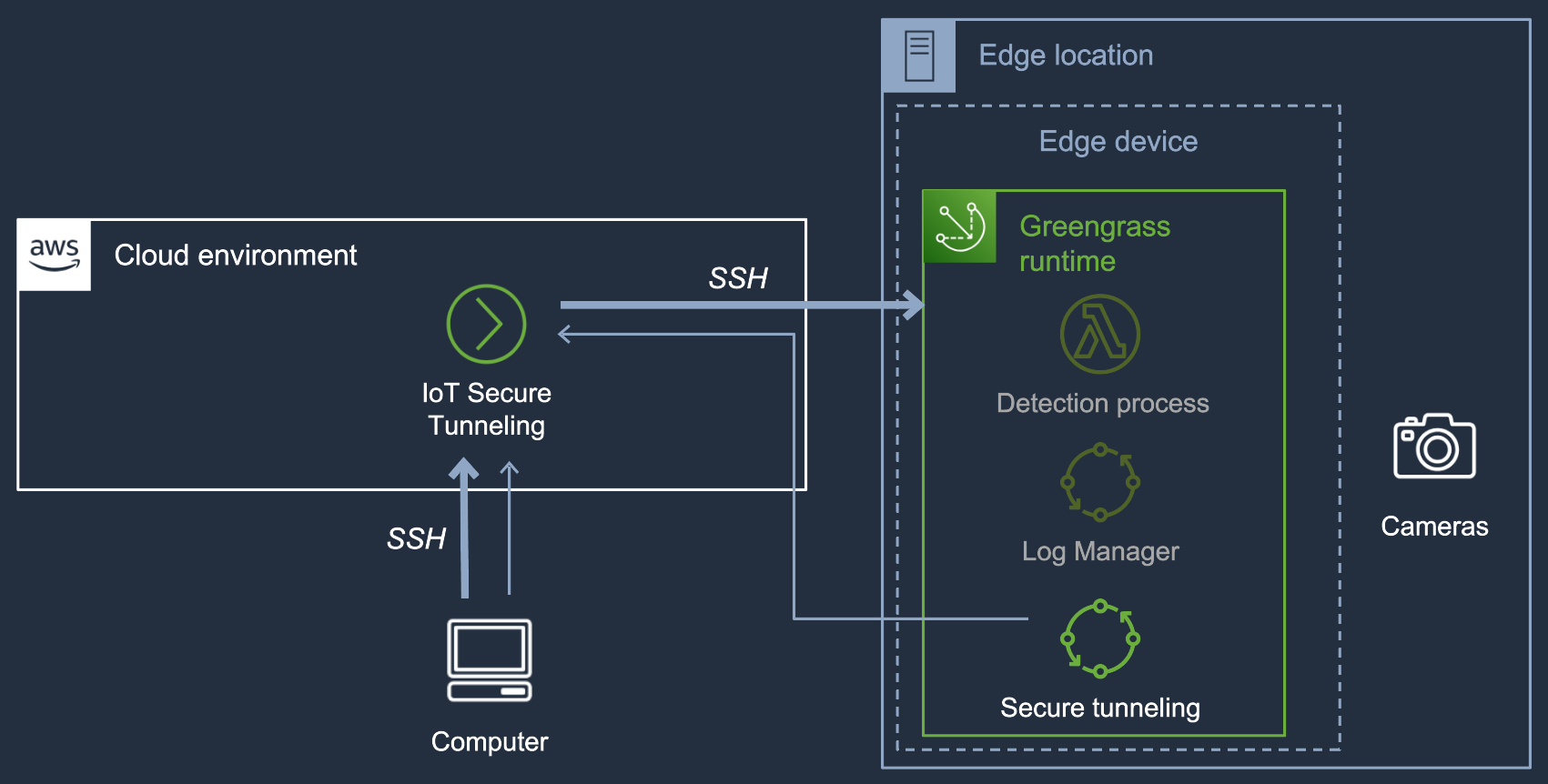

Secure tunneling

Sometimes it is necessary to remotely access the device to debug or install new dependencies. As previously explained, the edge device is located in a private network that is not accessible from outside. Fortunately, AWS provides a built-in Greengrass component that allows secure tunneling with an external machine.

Every time you need to access a device, you have to create an IoT Secure Tunneling resource on AWS (e.g., in the AWS Console). This resource has a limited lifetime (up to 12 hours). Once the tunnel is created, the Secure Tunneling component installed on the device opens its side of the connection. Then, on an external machine, the operator installs and runs the local proxy provided by AWS, which opens the other side.
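Instead of the console, the tunnel can also be opened programmatically. The sketch below expects `client` to be `boto3.client("iotsecuretunneling")` and uses its `open_tunnel` call; the thing name, description text and default lifetime are hypothetical. When the destination is given as a thing name, AWS IoT delivers the destination token to the device, so the operator only needs the source token for the local proxy.

```python
from typing import Any, Dict, List, Optional

MAX_LIFETIME_MINUTES = 12 * 60  # tunnels live at most 12 hours


def open_device_tunnel(client: Any, thing_name: str,
                       services: Optional[List[str]] = None,
                       lifetime_minutes: int = 60) -> Dict[str, str]:
    """Open a secure tunnel to a Greengrass device and return what the operator needs."""
    if not 1 <= lifetime_minutes <= MAX_LIFETIME_MINUTES:
        raise ValueError("lifetime must be between 1 and 720 minutes")
    response = client.open_tunnel(
        description=f"Debug session for {thing_name}",
        destinationConfig={"thingName": thing_name, "services": services or ["SSH"]},
        timeoutConfig={"maxLifetimeTimeoutMinutes": lifetime_minutes},
    )
    # The destination token goes to the device through AWS IoT;
    # the source token is passed to the operator's local proxy.
    return {"tunnel_id": response["tunnelId"], "source_token": response["sourceAccessToken"]}
```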

Once the connection is established on both sides, it is possible to open a session on the edge device. Several protocols are supported by AWS IoT, such as SSH and RDP. Note that instead of using the local proxy, it is also possible to access the device through an SSH session directly from the client integrated into the AWS IoT Console.

Conclusion

By leveraging machine learning, cloud services, and IoT technologies, Spikeseed has successfully implemented an automated parking management system. This solution meets the need for near real-time monitoring of a parking lot, and also ensures low latency, cost-effectiveness and data privacy by performing processing at the edge. Additionally, with AWS IoT and Greengrass enabling secure and remote management of the edge devices, the system is scalable and maintainable across multiple locations.